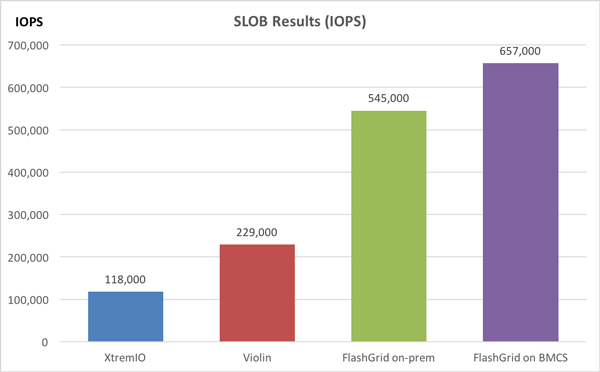

Our first performance results for a 3-node Oracle RAC cluster running on Oracle’s own Bare Metal Cloud (BMCS) with FlashGrid software are quite spectacular! We focused our testing on I/O performance, because CPU performance is generally the same whether the servers are on-premises or in the cloud. I/O is the harder problem in the cloud, and cloud storage is generally considered much slower than on-premises storage. But things are changing fast. Just look at our results.

Calibrate_IO

- Max IOPS = 6,413,125 – that is 6.4 million IOPS

- Latency = 0 – meaning it is below the measurement threshold of the tool

- Max MB/s = 55,880 – nearly 56 GB/s (yes, that is 18 times the 3 GB/s of an EMC XtremIO flash array!)
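To put the Calibrate_IO figures in perspective, here is a small back-of-the-envelope sketch that derives the speedup over a 3 GB/s array and the average load per node and per NVMe drive. It assumes 1 GB = 1000 MB and that the load was spread evenly over all 27 drives (3 instances × 9 SSDs); the per-drive numbers are derived averages, not measured values.

```python
# Figures reported by Calibrate_IO above
max_iops = 6_413_125
max_mbps = 55_880          # MB/s as reported
xtremio_mbps = 3_000       # 3 GB/s, assuming 1 GB = 1000 MB

nodes = 3
drives_per_node = 9        # each DenseIO.36 instance has nine NVMe SSDs
total_drives = nodes * drives_per_node

# Derived averages, assuming an even spread of I/O across nodes and drives
speedup = max_mbps / xtremio_mbps
per_node_mbps = max_mbps / nodes
per_drive_mbps = max_mbps / total_drives
per_drive_iops = max_iops / total_drives

print(f"Speedup vs 3 GB/s array: {speedup:.1f}x")
print(f"Throughput per node:     {per_node_mbps:,.0f} MB/s")
print(f"Throughput per drive:    {per_drive_mbps:,.0f} MB/s")
print(f"IOPS per drive:          {per_drive_iops:,.0f}")
```

The speedup works out to roughly 18.6x, consistent with the "18 times" claim, and the average per drive is about 2 GB/s.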

SLOB

- Physical Reads per sec: 537,000

- Physical Writes per sec: 120,000
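The two SLOB numbers also tell us something about the workload mix. The following sketch only combines the reported read and write rates into a total and a read/write split; the post does not state the SLOB settings that produced this mix, so nothing here should be read as the actual test configuration.

```python
# SLOB results reported above (physical I/O operations per second)
reads_per_sec = 537_000
writes_per_sec = 120_000

# Combined physical I/O rate and the share of reads vs. writes
total_per_sec = reads_per_sec + writes_per_sec
read_pct = 100 * reads_per_sec / total_per_sec
write_pct = 100 * writes_per_sec / total_per_sec

print(f"Total physical I/O: {total_per_sec:,} per sec")
print(f"Read/write split:   {read_pct:.1f}% / {write_pct:.1f}%")
```

This comes to 657,000 physical I/Os per second with a roughly 82/18 read/write split.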

It is interesting to compare these numbers with the results of on-premises deployments. The common perception has been that an in-house data center is faster than an IaaS deployment. Our results show that this is no longer true: Oracle BMCS DenseIO instances unleash the full power of NVMe flash devices and literally leave traditional flash arrays in the dust.

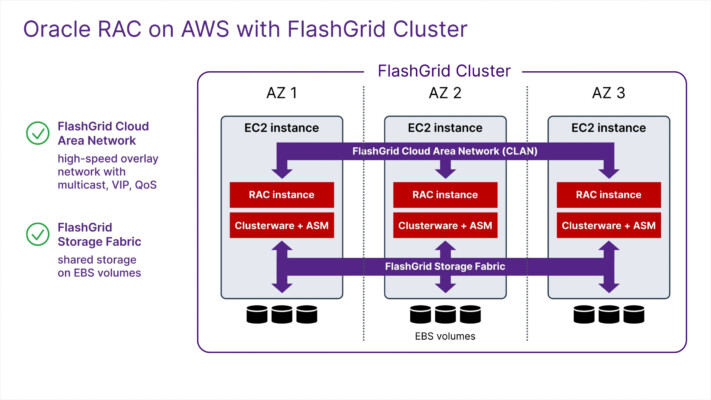

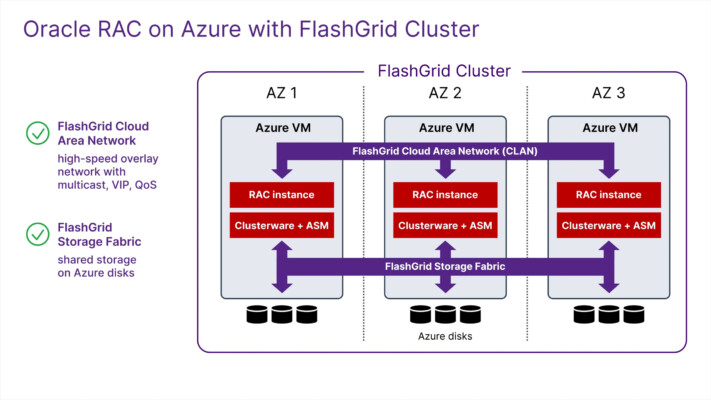

Here is what it takes to get these results:

- Three DenseIO.36 instances (each has nine 3.2TB NVMe SSDs)

- Oracle Linux 7

- Oracle Grid Infrastructure 12.1 and Oracle Database 12.1

- FlashGrid Cloud Area Network software

- FlashGrid Storage Fabric software with FlashGrid Read-Local Technology (our secret sauce behind the 55 GB/s)

But performance alone is not enough. We also need capacity and high availability (HA). This configuration provides 25.6 TB of usable capacity, and the data is mirrored by Oracle ASM across the three nodes, each in a separate Availability Domain. Yes, three copies of every data block at different physical sites. Not bad!
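With three-way mirroring, usable capacity can be at most one third of the raw NVMe capacity. The sketch below does that arithmetic in decimal gigabytes (an assumption about units) and compares the ceiling with the reported 25.6 TB. The post does not say what accounts for the difference, so the code only measures the gap; it does not explain it.

```python
nodes = 3
drives_per_node = 9
drive_gb = 3_200           # 3.2 TB per NVMe SSD, decimal units assumed
mirror_copies = 3          # ASM keeps one copy of each block per node

raw_gb = nodes * drives_per_node * drive_gb
mirrored_ceiling_gb = raw_gb // mirror_copies   # upper bound on usable space
reported_usable_gb = 25_600                     # 25.6 TB from the post
gap_gb = mirrored_ceiling_gb - reported_usable_gb

print(f"Raw capacity:        {raw_gb / 1000:.1f} TB")
print(f"3-way mirror limit:  {mirrored_ceiling_gb / 1000:.1f} TB")
print(f"Reported usable:     {reported_usable_gb / 1000:.1f} TB")
print(f"Gap:                 {gap_gb / 1000:.1f} TB "
      f"({gap_gb // drive_gb} drive(s) per copy)")
```

So 86.4 TB raw gives a 28.8 TB ceiling; the reported 25.6 TB equals the capacity of eight of the nine drives in each node.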

Some configuration and test details are below.

P.S. On February 9, 2017, we will be showing a live demo at Oracle Cloud Day / NoCOUG at Oracle headquarters in Redwood Shores.